From the Item Bank

The Professional Testing Blog

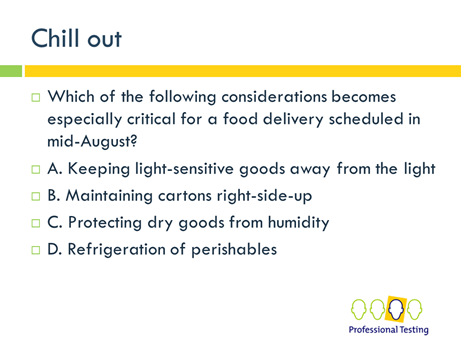

Avoiding (Bad) Discrimination in Licensing and Certification Tests

December 5, 2015

Testing programs are built to discriminate. Licensing and certification tests, specifically, class people into two groups: those who receive the credential and those who do not. The idea is to discriminate on the basis of relevant factors (“Does the candidate now have the knowledge required to perform the task at the required level?”) and not on the basis of irrelevant factors – whatever they may be.

Alignment

On the most basic level, to stick to relevant factors, to avoid what I’ll call “bad discrimination,” the test must be fully aligned to the profession. If taxi drivers, for example, almost invariably find obscure addresses using GPS-related software, a test that asks candidates to find obscure addresses unaided engages in bad discrimination. It’ll advantage some natives over perfectly competent recent arrivals, and drivers who are good at memorizing over those who are not.

What if a person who offers only hair-braiding services is required to have a full cosmetology license, and is tested on irrelevant skills? The requirement may protect licensed cosmetologists from some competition, but insofar as its role is to protect the public, it engages in bad discrimination.

So the first line of defense against bad discrimination is a good JTA (job task analysis) process and up-to-date test specifications.

Sources of Difficulty

The next defense against bad discrimination is at the test-item level, where we should always consider why an item is hard or easy. An item writer who wants to know whether candidates can calculate the area of a circle drafts this stem:

A knife is used to slice a lahmejun in half in a straight line. The line is 30 cm long. What is the area of each half?

It’s pretty clear that the item is biased in favor of those who know the shape of a lahmejun. What if the item writer substitutes a universally recognized food like pizza? Does that solve the problem? Maybe not.
A reviewer might note that pizzas are sometimes rectangular. In this imaginary scenario, I’d ask the lahmejun-and-pizza item writer two questions: First, is it time for a lunch break? Second, why are you avoiding the word “circular”? Is it to make the question harder? But any added difficulty comes only from people being confused about the shape of the object rather than the thing we are trying to test. That makes for bad discrimination.

Much of the training given when subject-matter experts set out to write or review items is aimed at avoiding irrelevant difficulty and irrelevant ease.

Irrelevant ease? I need not show you the picture that would come with this item for you to answer it correctly:

The child is holding an

A. apple.
B. cherry.
C. pomegranate.
D. tomato.

Language Level

Simplifying language is probably the single most useful step you can take to lower bad discrimination. To take an extreme case, consider this sentence, which I usually show when training subject-matter experts to write or review items:

Which of the following nutrients is not absent from a cup of coffee when no milk is added to it?

This is a great first draft. Now it’s time to change the question to

Which of the following nutrients is present in a cup of black coffee?

More broadly speaking, the level of language should match the level of education candidates are expected to have. Even where candidates are expected to be able to read at a high level, complex sentences, double negatives, and the like ought to be avoided wherever possible.

DIF (Differential Item Functioning)

In larger testing programs, it is possible to look at how, within a given overall ability level, members of different groups (e.g., women vs. men) perform on each item. Such information can inform item writing and review. There’s a body of research on what is called “differential item functioning” in the educational testing area. (See, for example, these two works by my former colleagues Kathleen O’Neill, W. Miles McPeek, and Cheryl L. Wild: “Differential Item Functioning on the Graduate Management Admission Test” and “Differential Item Functioning on the Graduate Record Examination,” both published in the ETS Research Report Series in 1993.) Not every lesson learned is applicable to professional testing, but an item-review facilitator familiar with this literature is a great asset to a program seeking to avoid bad discrimination.

Your experience isn’t always universal

Of the thousands of items written and reviewed over two decades, only a few have stuck in my mind. One of those was written by a brilliant colleague and friend for a test of critical reasoning. The item touched on a problem with childproof caps on medicine bottles. A medicine is prescribed to a grandmother. It turns out that she has a hard time with the childproof cap, leaves the bottle unsealed, and, oops, it is not even a little childproof any more.

I thought the item biased. My colleague’s grandmother and mine were both in their eighties at the time, and we could readily picture them having difficulty with childproof bottles. But we were not a representative sample of the testing population. Besides, the question was about a toddler’s grandmother, not the grandmothers of adults like us. It turns out that in 2002, half of all grandparents in the United States were under 48 when their first grandchild was born. I don’t think the age of 48 conjures the image of someone unable to handle a childproof bottle.

Indeed, using the category “elderly” as a proxy for “infirm” is not the best idea. Why not frame the question in terms of a person who might have had trouble with the cap by virtue of the very illness for which the medicine was prescribed? The issue, to be sure, is not whether some older people will be offended. The issue is that the candidate whose grandmother is a bodybuilder may have difficulty answering the question because he or she just doesn’t get it.
The larger point is to understand that we cannot always generalize from our own experiences. And the problem is that we don’t always know when our experiences aren’t universally shared. The best solution is to have a group of item writers and reviewers who represent a cross section of the population from which candidates are drawn. There’s gender, race, and ethnicity, but also work setting (e.g., big vs. small firm; government vs. private vs. nonprofit), and geography. Speaking of geography, here’s a slide I like to show when training item writers and reviewers for tests that will be delivered worldwide.

It’s intolerably hot where I’m sitting this morning, but I have to remember that mid-August weather varies worldwide. And if I forget, I hope to have enough variety of experiences among my item reviewers that someone else will raise the issue.
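
For readers who want to see what the DIF comparison described in the DIF section might look like in practice, here is a minimal sketch. The post does not say which statistic a program should use. This example assumes the Mantel-Haenszel procedure, one commonly used option, and reports the result on the delta scale as MH D-DIF = -2.35 ln(alpha). The function names, the group labels ("ref" and "focal"), the ability bands ("low" and "high"), and the toy counts are all hypothetical and chosen only for illustration.

```python
import math
from collections import defaultdict


def make_records(group, stratum, n_correct, n_incorrect):
    """Build toy (group, stratum, correct) records. Hypothetical helper."""
    return ([(group, stratum, True)] * n_correct
            + [(group, stratum, False)] * n_incorrect)


def mantel_haenszel_dif(records):
    """Return (common odds ratio, MH D-DIF) for one item.

    records: iterable of (group, stratum, correct), where group is "ref"
    or "focal", stratum is a matched ability level (for example a
    total-score band), and correct is True or False.
    """
    # Per stratum: [ref correct, ref incorrect, focal correct, focal incorrect]
    counts = defaultdict(lambda: [0, 0, 0, 0])
    for group, stratum, correct in records:
        if group == "ref":
            idx = 0 if correct else 1
        elif group == "focal":
            idx = 2 if correct else 3
        else:
            raise ValueError(f"unknown group: {group!r}")
        counts[stratum][idx] += 1

    num = 0.0
    den = 0.0
    for a, b, c, d in counts.values():
        n = a + b + c + d
        num += a * d / n  # reference correct x focal incorrect
        den += b * c / n  # reference incorrect x focal correct
    if num == 0 or den == 0:
        raise ValueError("odds ratio is undefined for these counts")

    alpha = num / den                 # common odds ratio across strata
    d_dif = -2.35 * math.log(alpha)   # delta-scale transformation
    return alpha, d_dif


# Toy data: within each ability band, the reference group answers the
# item correctly more often than the focal group.
records = (
    make_records("ref", "low", 10, 10) + make_records("focal", "low", 5, 15)
    + make_records("ref", "high", 16, 4) + make_records("focal", "high", 12, 8)
)
alpha, d_dif = mantel_haenszel_dif(records)
print(f"MH odds ratio: {alpha:.2f}, MH D-DIF: {d_dif:.2f}")
```

An odds ratio near 1 (D-DIF near 0) suggests that candidates matched on overall ability perform about the same on the item regardless of group. In this toy data the odds ratio is above 1 and the D-DIF is negative, which flags the item as relatively harder for the focal group. A flag like this is a prompt for item review, not proof of bias.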
