From the Item Bank

The Professional Testing Blog

Test Adaptation: A Psychometric Perspective

April 14, 2016

The primary role of psychometrics in the adaptation/localization process is to provide evidence that target and source forms are equivalent and that they yield meaningful and interpretable scores. For such evidence to be compelling, it is imperative to establish defensible arguments for reliability, validity, and fairness.

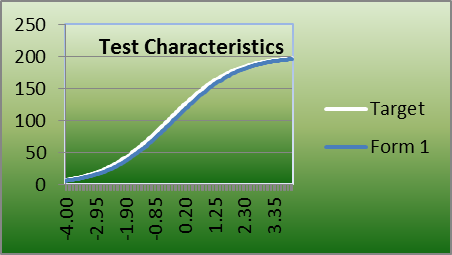

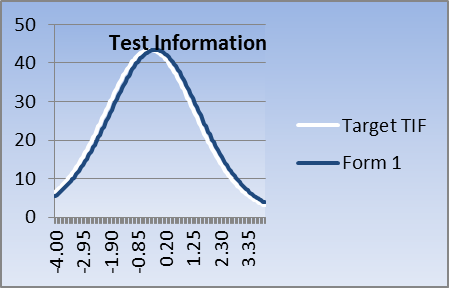

Psychometrics routinely touches all aspects of testing, from job/task/content analysis through score reporting. However, when tests are adapted for use in different languages and/or cultures, the role of psychometrics expands to address issues endemic to adapted instruments. For example, it is possible that the target construct(s) are different or fail to exist in the target population. In such cases, decentering the source constructs may be necessary. Another challenge might be seat time: should the time allowed to take the adapted exam be the same as the time allowed to take the source test? Sometimes the answer is yes, but other times the answer may be no. These are but two examples of the many psychometric challenges associated with adapting/localizing exam forms.

Establishing source-to-target equivalence is mission-critical when adapting/localizing a measurement instrument. Both test forms and examinee populations must be considered. To check for population equivalence, one may compare demographic and score data between source and target groups. To establish equivalence between measurement instruments, content, psychometric, and construct equivalence must all be established.

Content equivalence can be established by verifying that the number of operational items included on each exam aligns with the exam’s blueprint. Assessing psychometric equivalence between source and target forms may include comparing technical aspects of operational forms, such as mean difficulty (classical and/or IRT), information at the cut (in the case of criterion-based exams), form-level reliability, exposure rates of the items on the forms, and fit in terms of target test information functions (TIF) and test characteristic curves (TCC) (see Exhibit 1).
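To make the TIF and TCC comparison a little more concrete, here is a minimal sketch in Python. It assumes a Rasch model, which the post does not specify, and the item difficulties, the cut score, and the function names (`rasch_prob`, `tcc`, `tif`, `max_gap`) are all hypothetical values and helpers chosen for illustration. It computes each form's expected raw score and information across an ability range, then reports the largest gap between forms and the information at the cut.

```python
import math

def rasch_prob(theta, b):
    """Probability of a correct response under the Rasch model (an assumption here)."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))

def tcc(theta, difficulties):
    """Test characteristic curve: expected raw score at ability theta."""
    return sum(rasch_prob(theta, b) for b in difficulties)

def tif(theta, difficulties):
    """Test information function: sum of item informations p * (1 - p)."""
    probs = [rasch_prob(theta, b) for b in difficulties]
    return sum(p * (1.0 - p) for p in probs)

def max_gap(curve, form_a, form_b, thetas):
    """Largest absolute difference between two forms' curves over a theta grid."""
    return max(abs(curve(t, form_a) - curve(t, form_b)) for t in thetas)

# Hypothetical item difficulties in logits; these are NOT data from the post.
source_form = [-1.2, -0.5, 0.0, 0.4, 1.1]
target_form = [-1.0, -0.6, 0.1, 0.5, 1.3]

# Ability grid from -3.0 to 3.0 in steps of 0.1.
thetas = [x / 10.0 for x in range(-30, 31)]

# Hypothetical cut score on the theta scale.
cut = 0.0

print("Max TCC gap (raw score points):", round(max_gap(tcc, source_form, target_form, thetas), 3))
print("Max TIF gap:", round(max_gap(tif, source_form, target_form, thetas), 3))
print("Information at cut, source:", round(tif(cut, source_form), 3))
print("Information at cut, target:", round(tif(cut, target_form), 3))
```

How large a gap is tolerable is a program-level decision, not something the sketch can answer; in practice the item parameters would come from a calibration placed on a common scale.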

Exhibit 1. Plots of source and target TIFs and TCCs

Establishing construct equivalence may require gathering a wide variety of information, such as inter-scale correlations, goodness-of-fit indices, dimensionality studies, and plots of relevant data, such as person ability to item difficulty plots. In addition, reliability indices, such as KR-20 or coefficient alpha, and separation indices (for Rasch-based programs) should be examined.

And then there’s the issue of fairness. In addition to standard item analyses, differential item functioning (DIF) analyses need to be conducted to look for performance differences between the reference (source) and the focal (target) groups. The resulting information, perhaps coupled with qualitative feedback from examinees (open response fields or focus groups), can be used to screen for items that perform differently between equal-ability examinees from different groups. This could be especially helpful when conducting time analyses, as seat time may differ between source and target populations.

Psychometrics is but one component of an adaptation initiative. Other components may include logistics, test security, policy issues, and of course, a business argument. The following is a sample of commonly asked questions that should be answered (satisfactorily) before heading down the path of adaptation.

So while psychometrics provides the technical framework needed to support the adaptation process, at the end of the day, we need to have some assurance that potential benefits will outweigh expected risks and costs.
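As one concrete piece of that technical framework, the DIF screen described earlier can be sketched in a few lines of Python. The post does not name a DIF method, so the Mantel-Haenszel procedure used here is an assumption, and the function name `mantel_haenszel` and the counts are hypothetical. Examinees are matched on total score, and within each score stratum a 2x2 table of group by correct/incorrect is tallied for the item under study.

```python
import math

def mantel_haenszel(strata):
    """Mantel-Haenszel common odds ratio and its delta-scale transform for one item.

    strata: list of tuples, one per matched score level, in the order
        (reference_correct, reference_incorrect, focal_correct, focal_incorrect)
    Returns (alpha_mh, mh_d_dif), where mh_d_dif = -2.35 * ln(alpha_mh).
    """
    numerator = 0.0
    denominator = 0.0
    for ref_right, ref_wrong, foc_right, foc_wrong in strata:
        n = ref_right + ref_wrong + foc_right + foc_wrong
        if n == 0:
            continue  # an empty stratum carries no information
        numerator += ref_right * foc_wrong / n
        denominator += ref_wrong * foc_right / n
    if numerator == 0 or denominator == 0:
        raise ValueError("odds ratio is undefined for these counts")
    alpha_mh = numerator / denominator
    return alpha_mh, -2.35 * math.log(alpha_mh)

# Hypothetical counts for one item across three score strata; NOT data from the post.
item_strata = [
    (20, 30, 12, 38),  # low scorers
    (35, 15, 28, 22),  # middle scorers
    (45, 5, 40, 10),   # high scorers
]

alpha, delta = mantel_haenszel(item_strata)
print("MH odds ratio:", round(alpha, 3))
print("MH D-DIF:", round(delta, 3))
```

An odds ratio near 1 (a delta near 0) suggests the item behaves similarly for matched examinees in both groups; a negative delta indicates the item is relatively harder for the focal group. Flagging thresholds vary by program and are left out here on purpose.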

This post is the first in a two-part series on Test Adaptation.
